Let's Build an OpenAI-style Text-to-Token Visualizer

How GPTs turn any text into universal tokens with UTF-8 & Byte Pair Encoding (BPE)

In my previous article, I talked about how GPTs process text into tokens.

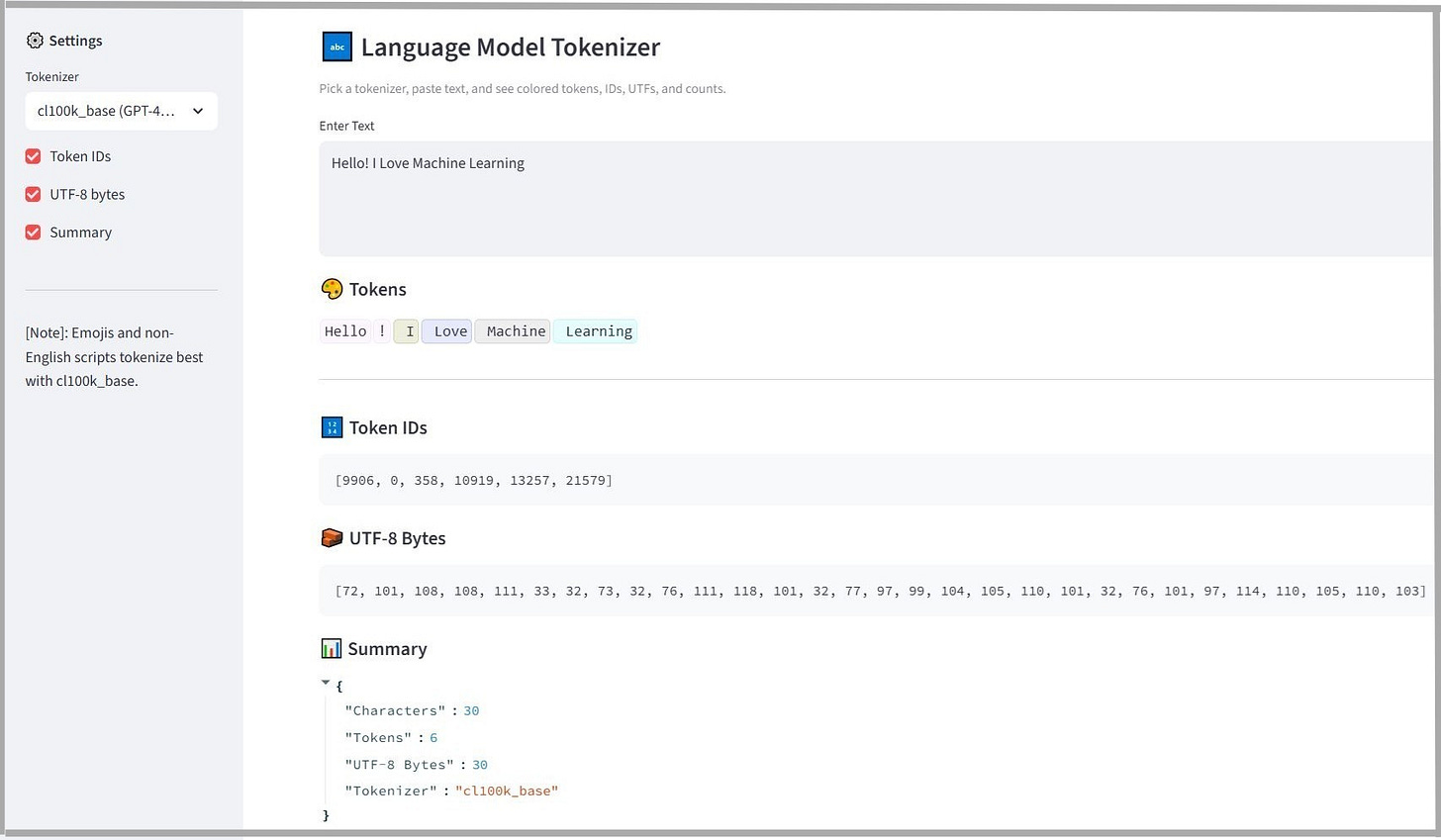

Here, I've built a simple, OpenAI-style implementation of that logic.

Specifically, it's a lightweight Streamlit app that shows how text is broken down into tokens, the basic building blocks that GPT models use to understand language.
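A byte-level tokenizer starts by converting the text to UTF-8 bytes. That step is what makes the tokens "universal": any string, in any script and including emoji, becomes a sequence of values from 0 to 255, so nothing is ever out of vocabulary. The sketch below uses only the standard library, and the helper name `utf8_view` is hypothetical, not part of the app.

```python
def utf8_view(text: str) -> list[tuple[str, list[int]]]:
    """Pair each character of `text` with the UTF-8 bytes that encode it."""
    return [(ch, list(ch.encode("utf-8"))) for ch in text]


for ch, raw in utf8_view("héllo 👋"):
    print(repr(ch), raw)
# ASCII characters take 1 byte, 'é' takes 2 ([195, 169]), '👋' takes 4 ([240, 159, 145, 139])
```

So 7 characters become 11 bytes, and every one of them is a valid starting token.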

Simply paste any text, pick a tokenizer (cl100k_base, gpt2, p50k_base, or r50k_base), and the app generates the tokens, their token IDs, and their UTF-8 bytes.
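To show where those three columns come from, here is a toy byte-level BPE in plain Python. It is a sketch under stated assumptions, not the app's code: the encoding names above are the pre-trained encodings shipped with OpenAI's `tiktoken` library, whereas this toy learns its own handful of merges from a tiny corpus and skips the regex pre-splitting real tokenizers apply. All function names are hypothetical.

```python
from collections import Counter


def pair_counts(ids: list[int]) -> Counter:
    """Count how often each adjacent pair of token IDs occurs."""
    return Counter(zip(ids, ids[1:]))


def merge(ids: list[int], pair: tuple[int, int], new_id: int) -> list[int]:
    """Replace each non-overlapping occurrence of `pair`, scanning left to right, with `new_id`."""
    out, i = [], 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out


def train_bpe(corpus: str, num_merges: int) -> dict[tuple[int, int], int]:
    """Learn merges from a corpus. IDs 0-255 are raw bytes; learned tokens get 256, 257, ..."""
    ids = list(corpus.encode("utf-8"))
    merges: dict[tuple[int, int], int] = {}
    for step in range(num_merges):
        counts = pair_counts(ids)
        if not counts:
            break
        best = max(counts, key=counts.get)  # most frequent adjacent pair
        merges[best] = 256 + step
        ids = merge(ids, best, merges[best])
    return merges


def encode(text: str, merges: dict[tuple[int, int], int]) -> list[int]:
    """Apply learned merges, always picking the pair that was learned earliest."""
    ids = list(text.encode("utf-8"))
    while len(ids) >= 2:
        counts = pair_counts(ids)
        pair = min(counts, key=lambda p: merges.get(p, float("inf")))
        if pair not in merges:
            break  # no learned merge applies any more
        ids = merge(ids, pair, merges[pair])
    return ids


def token_table(text: str, merges: dict[tuple[int, int], int]) -> list[tuple[str, int, list[int]]]:
    """Return (token text, token ID, UTF-8 bytes) rows, like the app's output table."""
    vocab = {i: bytes([i]) for i in range(256)}
    for (a, b), new_id in merges.items():  # dict keeps insertion (learning) order
        vocab[new_id] = vocab[a] + vocab[b]
    rows = []
    for tid in encode(text, merges):
        raw = vocab[tid]
        # A token may hold only part of a multi-byte character; decoding then shows U+FFFD.
        rows.append((raw.decode("utf-8", errors="replace"), tid, list(raw)))
    return rows


merges = train_bpe("low lower lowest low low", num_merges=5)
for token, tid, raw in token_table("lowest", merges):
    print(f"{token!r:>8} {tid:>4} {raw}")
```

The `errors="replace"` choice matters for a visualizer: byte-level tokens do not always align with character boundaries (an emoji unseen in training stays as four separate byte tokens here), so the bytes column is the only lossless view of a token.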

The results vary with the tokenizer you pick. cl100k_base is the encoding used by GPT-4 and GPT-3.5-turbo, so its output matches how those models tokenize text.
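Why do the tokenizers disagree? Each one ships its own ranked list of merges (gpt2 has a vocabulary of roughly 50k tokens, cl100k_base roughly 100k), and the same bytes get grouped differently depending on which merges exist and in what order. The minimal sketch below applies two small, hypothetical merge tables to the same word; neither table is taken from a real encoding.

```python
def bpe_split(text: str, merges: list[tuple[bytes, bytes]]) -> list[bytes]:
    """Split `text` into byte tokens; `merges` is ranked, earlier entries win."""
    ranks = {pair: rank for rank, pair in enumerate(merges)}
    parts = [bytes([b]) for b in text.encode("utf-8")]
    while len(parts) > 1:
        pairs = list(zip(parts, parts[1:]))
        best = min(pairs, key=lambda p: ranks.get(p, float("inf")))
        if best not in ranks:
            break  # no ranked merge applies
        i = pairs.index(best)  # merge the leftmost occurrence, then rescan
        parts[i:i + 2] = [best[0] + best[1]]
    return parts


# Two hypothetical merge tables standing in for two different tokenizers.
TOKENIZER_A = [(b"t", b"o"), (b"to", b"k"), (b"e", b"n"), (b"tok", b"en")]
TOKENIZER_B = [(b"k", b"e"), (b"ke", b"n"), (b"t", b"o")]

print(bpe_split("token", TOKENIZER_A))  # [b'token']
print(bpe_split("token", TOKENIZER_B))  # [b'to', b'ken']
```

Same input, same UTF-8 bytes, different token boundaries and counts: that is the effect you see when switching tokenizers in the app, just at a much smaller scale.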

The Python implementation is available on GitHub.